A/B Testing: A Beginner’s Guide

Learn the basics of A/B testing and how it can help optimize your marketing campaigns with this beginner's guide from Factors.ai. Boost your results today.

Here's a handy beginner's guide on the basics of A/B testing that covers what A/B testing is, why it's important, how to perform a robust test, and more! This should be a great introduction for those looking to dive into the world of optimisation.

What Is A/B Testing?

A/B testing is a method that, simply put, lets you compare two versions of something and find out which performs better by showing each version to a separate group of users at the same time.

Marketers use this technique to compare two or more versions of their websites, adverts, emails, pop-ups, or landing pages to see which is most effective. In A/B testing, A refers to the ‘control’ (the original version) and B refers to the ‘variation’ (the new version). A/B tests give the marketer quantitative insights and can also inform a qualitative understanding of what users respond to. A/B testing usually falls under the larger umbrella of Conversion Rate Optimization (CRO).

For example, you might test two different Google Ads to see which one drives more purchases, or two versions of a CTA button on a webpage to see which leads to more webinar sign-ups. The version that leads more visitors to take the desired action (clicking the ad, signing up for the webinar, etc.) is the winner.
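To make "performs better" concrete, here is a minimal Python sketch that computes each version's conversion rate and the variation's relative lift over the control. The visitor and sign-up counts are made up for illustration. A higher rate on its own does not yet make B the winner; the difference also has to be statistically significant.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who took the desired action."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(rate_a: float, rate_b: float) -> float:
    """Relative change of variation B over control A (0.25 means +25%)."""
    return (rate_b - rate_a) / rate_a


# Hypothetical webinar sign-up counts for two versions of a CTA button.
rate_a = conversion_rate(conversions=40, visitors=1000)  # control
rate_b = conversion_rate(conversions=50, visitors=1000)  # variation
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  lift: {relative_lift(rate_a, rate_b):+.1%}")
```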

Why Does It Matter?

A/B testing is a great way to field-test ideas before committing to them. It lets you track the impact of changes on key metrics such as conversion rates and drop-off rates, which shows how effective those changes are likely to be. It also provides evidence for decisions: leaders are reluctant to act without strong evidence, particularly when a change costs money, and A/B testing helps back ideas with data and decide where and how to invest the marketing budget. That makes it a valuable tool for building effective marketing strategies.

Where do marketers use A/B testing?

Almost any customer-facing element, whether a matter of style or content, can be evaluated using A/B testing.

Some common examples include:

- Website design and layout

- Email campaigns and personalised emails

- Social media marketing strategies

- Paid adverts

- Newsletters

Within each category, A/B tests can be run on many individual elements. For example, to test your website design, you could test the colour scheme, layout, headings and subheadings, pricing page, special offers, or CTA button designs, among many others.

Conversion metrics are specific to each website, but A/B tests can also be used to collect data on user behaviour and actions, pain points, reception to new features, and satisfaction with existing features. Which metrics matter most depends on the industry and business model. For example, B2B metrics (new leads or deals won) differ from their B2C and D2C counterparts (cart abandonment rate, total purchases, etc.).

The Primary Types of A/B tests:

1. Split URL testing:

Split URL testing is the simplest in concept. Two versions of a webpage, each hosted at its own URL, are compared by splitting traffic between them to see which performs better on key metrics. It is the primary testing method for most organisations pursuing website optimisation. However, it is not well suited to evaluating several changes at once; it is mostly used to compare the original version with a new version that contains some changes. More importantly, it cannot tell you how different changes or elements interact with each other, or which combinations perform best.

2. Multivariate testing (MVT):

Multivariate testing allows the experimenter to compare multiple variables in the same test, which addresses the main limitation of split URL testing. Here, you compare various combinations of the elements whose impact you're trying to measure. A thorough multivariate test can include every possible combination to find which one produces the best results. However, because traffic is divided among all the combinations, multivariate testing needs far more traffic than a simple A/B test for each combination to receive enough visitors.
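The traffic problem grows quickly because the number of combinations is the product of the number of versions of each element. The sketch below enumerates every combination for a hypothetical test of three page elements and divides an assumed daily traffic figure evenly among them; the element names, versions, and traffic number are illustrative only.

```python
from itertools import product

# Hypothetical page elements and the versions of each under test.
elements = {
    "headline": ["current", "benefit-led"],
    "cta_colour": ["blue", "green", "orange"],
    "hero_image": ["photo", "illustration"],
}

# Every combination of versions becomes its own variant of the page.
combinations = list(product(*elements.values()))
print(len(combinations), "combinations")  # 2 * 3 * 2

daily_visitors = 6000  # assumed traffic, split evenly across combinations
per_combination = daily_visitors / len(combinations)
print(f"{per_combination:.0f} visitors per combination per day")
```

Each combination here receives one twelfth of the traffic, compared with the half that each version gets in a two-version split URL test, which is why multivariate tests take longer to produce reliable results on the same traffic.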

3. Multi-page testing:

Multi-page testing, as the name suggests, applies the changes being studied across multiple pages instead of the single page used in a simple split A/B test. This shows how the changes affect visitors as they interact with the different pages they encounter on the website. It also keeps the experience consistent: a visitor who is shown a variation sees it on every page in the test.
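One common way to keep a visitor's experience consistent across pages is to derive the variant from a hash of the experiment and visitor identifiers, leaving the page out of the key, so every page in the test resolves to the same variant. This is a minimal sketch of that idea, not how any particular tool implements it; `assign_variant`, the experiment ID, and the funnel paths are hypothetical.

```python
import hashlib


def assign_variant(experiment_id: str, visitor_id: str,
                   variants: tuple = ("A", "B")) -> str:
    """Deterministically bucket one visitor for one experiment.

    The page URL is deliberately left out of the hash key, so a visitor
    lands in the same bucket on every page the experiment covers.
    """
    key = f"{experiment_id}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(variants)
    return variants[bucket]


# Hypothetical experiment spanning a three-page sign-up funnel.
funnel = ["/pricing", "/signup", "/checkout"]
for page in funnel:
    print(page, assign_variant("pricing-redesign", "visitor-123"))
```

Because the assignment is computed rather than stored, any page or server can recompute it without a shared lookup, and using a different experiment ID reshuffles visitors so separate experiments do not reuse the same split.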

How to perform an A/B test

The A/B testing process can be summarised as follows:

1. Data Collection:

In the first stage, marketers or experimenters collect data from their analytics software, looking for high- and low-traffic areas, pages with high and low conversion rates, and drop-off rates. This shows how the webpage is currently performing.
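As a minimal sketch of this step, the snippet below ranks pages by traffic and computes each page's conversion and drop-off rates. The page paths and counts are hypothetical, and it assumes drop-off is measured as exits divided by visits; your analytics software may define it differently.

```python
from dataclasses import dataclass


@dataclass
class PageStats:
    page: str
    visits: int
    conversions: int
    exits: int  # sessions that ended on this page (assumed drop-off measure)

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.visits

    @property
    def drop_off_rate(self) -> float:
        return self.exits / self.visits


# Hypothetical numbers standing in for an analytics export.
pages = [
    PageStats("/home", 12000, 240, 4800),
    PageStats("/pricing", 3000, 150, 1500),
    PageStats("/webinar", 800, 120, 200),
]

# Highest-traffic pages first: changes there affect the most visitors.
for p in sorted(pages, key=lambda p: p.visits, reverse=True):
    print(f"{p.page:<10} visits={p.visits:>6}  "
          f"conversion={p.conversion_rate:.1%}  drop-off={p.drop_off_rate:.1%}")
```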

2. Decide what features you want to test:

Here, marketers decide which features of the website or webpage they want to test and identify their goals; in other words, they determine the key conversion metrics they want to improve for those features.

3. Formulate hypothesis:

Next, generate A/B testing ideas and formulate a hypothesis explaining why the proposed changes should improve the metrics being tracked.

4. Create variations:

Once the hypothesis has been formulated, giving direction and clarity to the marketer’s goals, create variations to test against the current version. This is also where the marketer chooses the testing method and the A/B testing tool.

5. Run test:

After everything is in place, the only thing left to do is run the test. A common recommendation is around two weeks, but the right duration varies with the campaign, industry, and traffic: the test should run until each version has received enough visitors to produce a reliable result.
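How long is "long enough" can be estimated up front from the traffic you expect. The sketch below uses the standard normal-approximation sample-size formula for comparing two conversion rates (two-sided 5% significance level, 80% power) and converts the result into days. The baseline rate, expected rate, and daily traffic are hypothetical inputs, and A/B testing tools may use different formulas.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in each group to detect a change from `baseline`
    to `expected` with a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline + expected) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(baseline * (1 - baseline)
                                      + expected * (1 - expected))) ** 2
    return math.ceil(numerator / (expected - baseline) ** 2)


def days_needed(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days to collect the sample if traffic is split evenly across variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


# Hypothetical: 4% baseline conversion, hoping to detect a rise to 5%.
n = sample_size_per_variant(baseline=0.04, expected=0.05)
print(n, "visitors per variant")
print(days_needed(n, daily_visitors=1000), "days at 1,000 visitors/day")
```

With these inputs the estimate comes out to about two weeks, in line with the rule of thumb in the step above. Smaller expected improvements need much larger samples, because the required size scales with one over the squared difference between the two rates.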

6. Analyse results:

Once the test is complete, the experimenter can interpret the results. It is important to check that the result is statistically significant, meaning the difference between the versions is unlikely to be due to chance alone. Only then can a better-performing version’s results be confidently attributed to the changes (and not to coincidence).
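A common way to check significance for conversion rates is a two-proportion z-test. The minimal sketch below uses the pooled version of that test with Python's standard-library normal distribution. The visitor and conversion counts are hypothetical, and your A/B testing tool may use a different statistical method (for example, a Bayesian or sequential approach).

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int,
                          conv_b: int, n_b: int) -> tuple:
    """Return (z, two-sided p-value) for H0: both versions convert equally."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # conversion rate under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value


# Hypothetical results: control (A) vs variation (B).
z, p = two_proportion_z_test(conv_a=270, n_a=7000, conv_b=340, n_b=7000)
print(f"z = {z:.2f}, p = {p:.4f}")
print("significant at 5%" if p < 0.05 else "not significant at 5%")
```

With these made-up counts the p-value is well below 0.05. It is good practice to fix the sample size before starting and not to stop the test the moment the p-value dips below 0.05, since repeatedly checking and stopping early inflates the chance of a false positive.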

7. Make changes:

Finally, now that the marketer has data backing their proposed changes, they can implement them and reap the rewards of a more effective variation on metrics such as conversion rates, drop-off rates, click-through rates and so on.

How do A/B testing tools work?

In short, every A/B testing tool has a piece of code that decides which variation of the webpage, email, or ad each visitor sees. It also collects data on the visitors to each variation, which helps you compare and analyse visitor behaviour.

This code is configured with the URL of the page(s) being tested and the goal metrics you want to measure, which together determine which variation performs better. When a visitor arrives, the tool opts them into the experiment and splits traffic so that half of visitors see version A (the control) and half see version B (the variation). A cookie records which version each visitor was assigned, so they keep seeing the same version, and the tool measures their actions on the webpage toward the specified goal.
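The cookie-based flow described above can be sketched as a toy Python class, with a dictionary standing in for visitors' browser cookies. `SimpleSplitTest` and its methods are hypothetical and only illustrate the mechanics: a random 50/50 assignment on the first visit, a sticky assignment afterwards, and goal tracking per version. Real tools run this logic in the browser or on their servers and handle much more.

```python
import random
from collections import Counter
from typing import Optional


class SimpleSplitTest:
    """Toy stand-in for an A/B testing tool's tracking snippet.

    `cookies` plays the role of each visitor's browser cookie: on the first
    visit a version is assigned at random, and later requests read that
    assignment back instead of re-rolling it.
    """

    def __init__(self, seed: Optional[int] = None) -> None:
        self.cookies: dict = {}            # visitor_id -> "A" or "B"
        self.visitors: Counter = Counter() # visitors assigned per version
        self.converted: set = set()        # visitors who reached the goal
        self._rng = random.Random(seed)

    def variant_for(self, visitor_id: str) -> str:
        if visitor_id not in self.cookies:
            # First visit: 50/50 split between control (A) and variation (B).
            version = "A" if self._rng.random() < 0.5 else "B"
            self.cookies[visitor_id] = version
            self.visitors[version] += 1
        return self.cookies[visitor_id]

    def track_goal(self, visitor_id: str) -> None:
        # Count each visitor at most once, under the version they were shown.
        self.variant_for(visitor_id)
        self.converted.add(visitor_id)

    def conversion_rates(self) -> dict:
        wins = Counter(self.cookies[v] for v in self.converted)
        return {ver: wins[ver] / n for ver, n in self.visitors.items()}


test = SimpleSplitTest(seed=7)
shown = test.variant_for("visitor-1")
assert test.variant_for("visitor-1") == shown  # returning visitor keeps their version
test.track_goal("visitor-1")
print(test.conversion_rates())
```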

There are several tools on the market for A/B testing, including HubSpot’s A/B testing tool, VWO, and Optimizely. Google Optimize was also widely used but was discontinued by Google in September 2023.

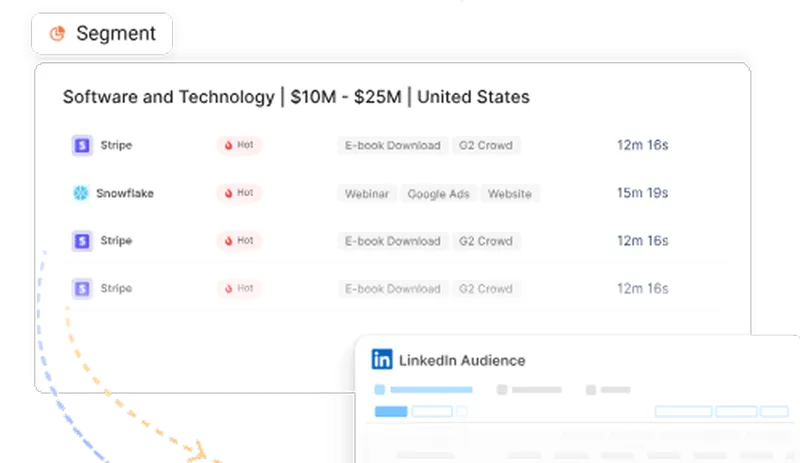
